Neuromorphic Computing Seminar Report

ABSTRACT

Compared with the von Neumann computer architecture, neuromorphic systems offer unique and novel solutions to the discipline of artificial intelligence. Inspired by biology, these systems model the human brain by connecting artificial neurons and synapses, which also helps to reveal new neuroscience concepts. Many researchers have invested heavily in neuro-inspired models, algorithms, learning approaches, and operating systems for neuromorphic systems, and have implemented many corresponding applications. This paper presents a comprehensive review of Neuromorphic Computing and its potential for new research applications. Towards the end, we conclude with a broad discussion and a viable plan for the latest application prospects, to help developers better understand Neuromorphic Computing so that they can build their own artificial intelligence projects.

- Introduction

Nowadays, neuromorphic computing has become a popular alternative to the von Neumann computing architecture for applications such as cognitive processing. It builds biologically inspired systems from highly connected synthetic neurons and synapses in order to realize theoretical neuroscientific models and to tackle challenging machine learning tasks. The von Neumann architecture is the predominant computing standard for machines. However, it differs significantly from the working model of the human brain in organizational structure, power requirements, and processing capabilities [1]. Therefore, neuromorphic computing has emerged in recent years as an auxiliary architecture to the von Neumann system. Neuromorphic computation is used to build a programming framework in which the system can learn and create applications that simulate neuromorphic functions. The field can be divided into neuro-inspired models, algorithms and learning methods, hardware and equipment, support systems, and applications [2].

Neuromorphic architectures have several significant and special requirements, such as high connectivity and parallelism, low power consumption, and the collocation of memory and processing [3]. Compared to traditional von Neumann architectures, they can execute complex computations faster while consuming less power and occupying a smaller footprint. These are exactly the areas in which the von Neumann architecture is bottlenecked, so the neuromorphic architecture is considered an appropriate choice for implementing machine learning algorithms [4].

There are ten main motivations for using a neuromorphic architecture: real-time performance, parallelism, the von Neumann bottleneck, scalability, low power, footprint, fault tolerance, speed, online learning, and neuroscience [1]. Among them, real-time performance is the main driving force behind neuromorphic systems. Through parallelism and hardware-accelerated computing, these devices are often able to run neural network applications faster than von Neumann architectures [5]. In recent years, the main focus of neuromorphic system development has been low power consumption [5] [6] [7]. Biological neural networks are fundamentally asynchronous [8], and the brain's efficient, data-driven operation can be modeled with event-based computation [9]. However, managing the communication of asynchronous, event-based tasks in large systems is a challenge for the von Neumann architecture [10]. Hardware implementations of neuromorphic computing lend themselves to large-scale parallel architectures because they place both memory and computation in the neuron nodes, and they achieve ultra-low power consumption during data processing. Moreover, their scalability makes it easy to build large-scale neural networks. Because of all the aforementioned advantages, the neuromorphic architecture is a better candidate than the von Neumann architecture for hardware implementation [11].
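To make the idea of event-based computation with state stored in the neuron nodes more concrete, here is a minimal Python sketch of event-driven spike propagation. It is an illustration under stated assumptions, not a model of any particular chip: it assumes simple non-leaky integrate-and-fire neurons, synapses with fixed weights and delays, and a hypothetical function `run_event_driven` with a hypothetical `synapses` layout. A neuron's state is touched only when a spike event reaches it, so idle neurons cost no work, which loosely mirrors why event-driven hardware can save power.

```python
import heapq


def run_event_driven(synapses, thresholds, external_events, t_end):
    """Event-driven simulation of non-leaky integrate-and-fire neurons.

    synapses: dict mapping a presynaptic neuron to a list of
        (postsynaptic neuron, weight, delay) tuples (hypothetical layout).
    thresholds: firing threshold for each neuron.
    external_events: list of (time, neuron, weight) input events.
    t_end: events later than this time are ignored.

    Returns (spikes, updates): the (time, neuron) spikes emitted and the
    number of events that actually touched a neuron's state.
    """
    potential = [0.0] * len(thresholds)  # state kept per neuron
    events = list(external_events)
    heapq.heapify(events)  # priority queue ordered by event time
    spikes = []
    updates = 0
    while events:
        time, neuron, weight = heapq.heappop(events)
        if time > t_end:
            break  # queue is time-ordered, so all remaining events are later
        updates += 1  # work is done only when an event arrives
        potential[neuron] += weight
        if potential[neuron] >= thresholds[neuron]:
            potential[neuron] = 0.0  # reset after firing
            spikes.append((time, neuron))
            # Schedule delivery of this spike to every downstream neuron.
            for post, w, delay in synapses.get(neuron, []):
                heapq.heappush(events, (time + delay, post, w))
    return spikes, updates


# Chain 0 -> 1 -> 2; neuron 3 receives no input and is never updated.
synapses = {0: [(1, 1.0, 1.0)], 1: [(2, 1.0, 1.0)]}
spikes, updates = run_event_driven(
    synapses, [1.0, 1.0, 1.0, 1.0], [(0.0, 0, 1.0)], t_end=10.0
)
print(spikes, updates)  # prints [(0.0, 0), (1.0, 1), (2.0, 2)] 3
```

Only three state updates are performed for a four-neuron network, because the unconnected neuron never receives an event. A clock-driven simulator would instead update every neuron at every time step, whether or not anything happened.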

The basic problem in neuromorphic computing is how to structure the neural network model. Biological neurons are usually composed of a cell body, an axon, and dendrites. Depending on which of these components a model implements, neuron models are divided into five groups, ranging from biologically driven to computationally driven models.

- ARTIFICIAL NEURAL NETWORKS

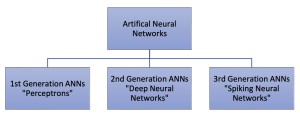

An Artificial Neural Network (ANN) is a collection of interconnected nodes inspired by the biological human brain. The objective of an ANN is to perform cognitive functions such as problem-solving and machine learning. Mathematical models of ANNs date back to the 1940s; however, the field then remained relatively quiet for a long time (Maass, 1997). Nowadays, ANNs have become very popular, following the success of ImageNet in 2009 (Hongming, et al., 2018). The reasons behind this are developments in ANN models and in hardware systems that can implement these models (Sugiarto & Pasila, 2018). ANNs can be separated into three generations based on their computational units and performance (Figure 1).

Figure 1- Generations of Artificial Neural Networks

The first generation of ANNs started in 1943 with the work of McCulloch and Pitts (Sugiarto & Pasila, 2018). Their work was based on a computational model of neural networks in which each neuron is a binary threshold unit, the forerunner of what was later called the “perceptron”. The model was later extended with extra hidden layers (Multi-Layer Perceptron) for better accuracy in MADALINE, developed by Widrow and his students in the 1960s (Widrow & Lehr, 1990). However, first-generation ANNs were far from biological models and produced only binary (digital) outputs; in effect, they implemented threshold-based if/else decisions. The second generation of ANNs improved on the first by replacing the hard threshold with continuous activation functions. These functions are applied between the visible and hidden layers of perceptrons, producing the structure known as “deep neural networks” (Patterson, 2012; Camuñas-Mesa, et al., 2019). Therefore, second-generation models are closer to biological neural networks. The activation functions of second-generation models are still an active area of research, and existing models are in great demand in both industry and science. Most current developments in artificial intelligence (AI) are based on these second-generation models, which have proven their accuracy in cognitive processes (Zheng & Mazumder, 2020).
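The difference between the first and second generations can be sketched in a few lines of Python. The threshold unit below is a common weighted simplification of the McCulloch-Pitts neuron, and the logistic sigmoid stands in for the continuous activation functions of second-generation networks; the function names and parameter values are illustrative assumptions, not taken from the cited works.

```python
import math


def threshold_unit(inputs, weights, threshold):
    """First-generation style neuron: weighted sum followed by a hard threshold.

    The output is binary (0 or 1).
    """
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def sigmoid_unit(inputs, weights, bias):
    """Second-generation style neuron: weighted sum followed by a sigmoid.

    The output is a continuous value between 0 and 1 and is differentiable,
    which is what allows gradient-based training of multi-layer networks.
    """
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Numerically stable logistic function (avoids overflow in math.exp).
    if total >= 0:
        return 1.0 / (1.0 + math.exp(-total))
    z = math.exp(total)
    return z / (1.0 + z)


# A two-input AND gate built from a single threshold unit.
for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, threshold_unit([a, b], [1.0, 1.0], threshold=2.0))

# The same weights with a sigmoid give a graded, not binary, output.
print(round(sigmoid_unit([1, 1], [1.0, 1.0], bias=-1.5), 3))  # prints 0.622
```

The threshold unit can only answer "fire or not", whereas the sigmoid output changes smoothly with its inputs; that smoothness is the property second-generation learning algorithms rely on.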

The third generation of ANNs is known as Spiking Neural Networks (SNNs). They are biologically inspired structures in which information is represented as binary events (spikes). Their learning mechanism differs from previous generations and is inspired by the principles of the brain (Kasabov, 2019). SNNs do not depend on a clock-cycle-based firing mechanism: a neuron emits an output (spike) only when it has accumulated enough input to surpass its internal threshold. Moreover, neurons can work in parallel (Sugiarto & Pasila, 2018). In theory, thanks to these two features, SNNs consume less energy and work faster than second-generation ANNs (Maass, 1997).
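A widely used neuron model in SNNs is the leaky integrate-and-fire (LIF) neuron, which illustrates the "accumulate until the threshold is crossed, then spike" behaviour described above. The text does not say which neuron model it has in mind, so the LIF choice, the function name `simulate_lif`, and all parameter values below are assumptions for illustration. For simplicity the sketch uses a fixed time step (forward Euler integration), whereas neuromorphic hardware typically updates neurons asynchronously.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0, v_rest=0.0,
                 v_threshold=1.0, v_reset=0.0, resistance=1.0):
    """Leaky integrate-and-fire neuron integrated with forward Euler.

    input_current: sequence of input values, one per time step.
    Returns the times at which the neuron emitted a spike.
    """
    v = v_rest
    spike_times = []
    for step, current in enumerate(input_current):
        # The potential leaks back toward rest while integrating the input.
        v += (-(v - v_rest) + resistance * current) * (dt / tau)
        if v >= v_threshold:
            # Threshold crossed: emit a binary event (spike) and reset.
            spike_times.append(step * dt)
            v = v_reset
    return spike_times


print(simulate_lif([0.5] * 100))  # prints [] (input too weak to reach threshold)
print(simulate_lif([2.0] * 30))   # prints [6.0, 13.0, 20.0, 27.0]
```

With a weak input the leak balances the input before the threshold is reached, so the neuron stays silent and produces no output at all; with a stronger input it fires at regular intervals. In an SNN, information is carried by the timing and rate of these spikes rather than by continuous activation values.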

The advantages of SNNs over ANNs are (Kasabov, 2019):

- Efficient modeling of temporal (spatio-temporal or spectro-temporal) data

- Efficient modeling of processes that involve different time scales

- Bridging higher-level functions and “lower” level genetics

- Integration of modalities, such as sound and vision, in one system

- Predictive modeling and event prediction

- Fast and massively parallel information processing

- Compact information processing

- Scalable structures (from tens to billions of spiking neurons)

- Low energy consumption, if implemented on neuromorphic platforms

- Deep learning and deep knowledge representation in brain-inspired (BI) SNN

- Enabling the development of BI-AI when using brain-inspired SNN

Although SNNs appear to have many advantages over ANNs (Table 2), advances in the associated microchip technology, which are gradually allowing scientists to implement such complex structures and discover new learning algorithms (Lee, et al., 2016) (Furber, 2016), are still very recent (after the 2010s). With only about ten years of implementation in the area, Spiking Neural Network technology is therefore relatively young compared to the second generation. It needs further research and more intensive implementation to exploit its advantages more efficiently and effectively.
